GPT-4 can ace the bar, but it only has a decent chance of passing the CFA exams. Here's a list of difficult exams that ChatGPT and GPT-4 have passed.

OpenAI's buzzy chatbot, ChatGPT, has already passed medical, law, and business school exams.

And its newest model, GPT-4, can ace the bar and has a reasonable chance of passing the CFA exam.

Insider rounded up a list of the assignments, quizzes, and tests both models have passed.

Since OpenAI launched ChatGPT last November, people have been putting the chatbot to the test, quite literally, by having it take exams and write essays. While the bot has performed reasonably well at the high school level, and on occasion even at the graduate level, it certainly makes its share of mistakes, too.

But then, in March, OpenAI released GPT-4, its most advanced model to date. The deep learning model can comprehend and discuss pictures and generate eight times the text of its predecessor, ChatGPT, making it a significantly sharper exam-taker.

If you're wondering exactly how smart these generative AI tools are, check out some of the difficult exams they've attempted, aced, and failed.

GPT-4 has a shot at passing the CFA exam — but ChatGPT? Not a chance.

GPT-4 has a "decent chance" of passing the CFA Level I and Level II exams with appropriate prompting, while ChatGPT would likely fail under every setting tested, according to a study by researchers from Queen's University, Virginia Tech, and J.P. Morgan's AI research division. The models struggled more with Level II than Level I, the researchers said, noting that there's "no consensus" on which level is more difficult for human exam takers.

GPT-4 performed better than ChatGPT in almost every topic, the researchers found.

The series of three exams required to earn the CFA charter is notoriously difficult for humans, too. Pass rates for Levels I, II, and III ranged from 37% to 47% in August 2023, according to the CFA Institute.

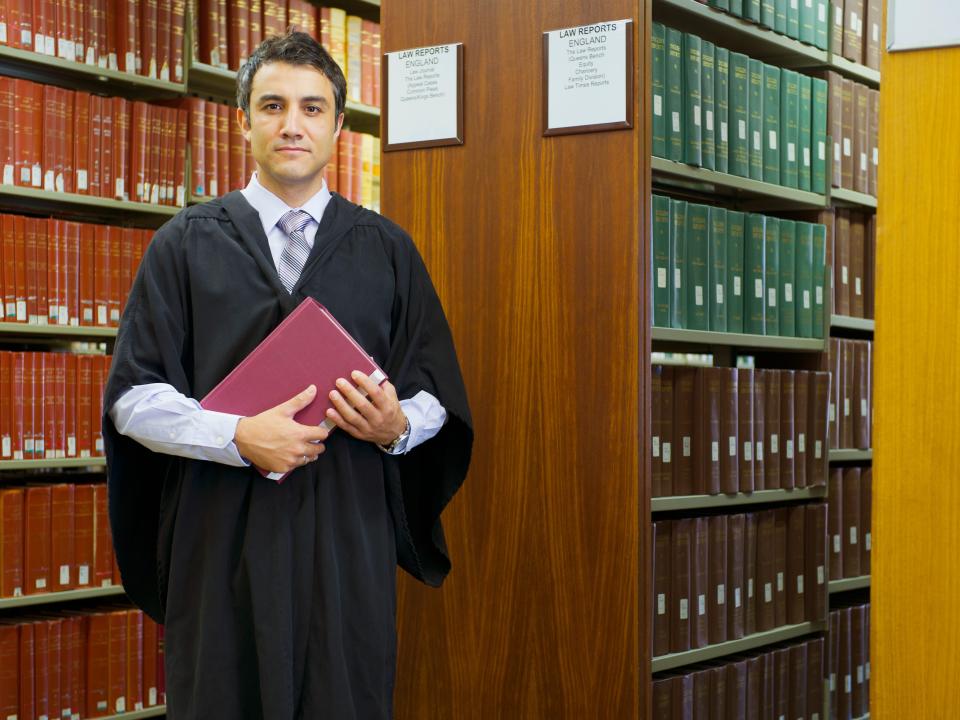

GPT-4 scored in the 90th percentile on the bar exam, with a score of 298 out of 400.

GPT-3.5, which powers the free version of ChatGPT, scored only in the 10th percentile on the bar exam, according to OpenAI.

The threshold for passing the bar varies from state to state. In New York, though, exam takers need a score of 266, around the 50th percentile, to pass, according to The New York State Board of Law Examiners.

GPT-4 aced the SAT Reading & Writing section with a score of 710 out of 800, which puts it in the 93rd percentile of test-takers.

Meanwhile, GPT-3.5 scored in the 87th percentile with a score of 670 out of 800, according to OpenAI.

On the math section, GPT-4 earned a 700 out of 800, placing it in the 89th percentile of test-takers, according to OpenAI. GPT-3.5 scored in the 70th percentile, OpenAI noted.

In total, GPT-4 scored 1410 out of 1600 points. The average score on the SAT in 2021 was 1060, according to a report from the College Board.

GPT-4's scores on the Graduate Record Examinations, or GRE, varied widely by section.

While it scored in the 99th percentile on the verbal section and in the 80th percentile on the quantitative section, GPT-4 scored only in the 54th percentile on the writing test, according to OpenAI.

GPT-3.5 also scored in the 54th percentile on the writing test, and scored in the 25th and 63rd percentiles on the quantitative and verbal sections, respectively, according to OpenAI.

GPT-4 scored in the 99th to 100th percentile on the 2020 USA Biology Olympiad Semifinal Exam, according to OpenAI.

The USA Biology Olympiad is a prestigious national science competition that regularly draws some of the brightest biology students in the country. The first round is a 50-minute open online exam taken by thousands of students nationwide, according to USABO's site.

The second round — the Semifinal Exam — is a 120-minute exam with three parts featuring multiple choice, true/false, and short answer questions, USABO notes on its site. Students with the top 20 scores on the Semifinal Exam will advance to the National Finals, according to USABO.

GPT-4 has passed a host of Advanced Placement examinations, the College Board-administered exams for college-level courses taken by high school students.

Scores range from 1 to 5, with scores of 3 and above generally considered passing grades, according to the College Board.

GPT-4 received a 5 on AP Art History, AP Biology, AP Environmental Science, AP Macroeconomics, AP Microeconomics, AP Psychology, AP Statistics, AP US Government and AP US History, according to OpenAI.

On AP Physics 2, AP Calculus BC, AP Chemistry, and AP World History, GPT-4 received a 4, OpenAI said.

GPT-4 still struggles with high school math exams.

The AMC 10 and 12 are 25-question, 75-minute exams for high school students that cover mathematical topics including algebra, geometry, and trigonometry, according to the Mathematical Association of America's site.

In the fall of 2022, the average score on the AMC 10 was 58.33 out of 150 possible points, and the average on the AMC 12 was 59.9, according to the MAA's site. GPT-4 scored 30 and 60, respectively, placing it between the 6th and 12th percentile on the AMC 10 and between the 45th and 66th percentile on the AMC 12, according to OpenAI.

While it's notoriously difficult to earn your credentials as a wine steward, GPT-4 can pass the exams to become a sommelier.

GPT-4 has passed the Introductory Sommelier, Certified Sommelier, and Advanced Sommelier exams with scores of 92%, 86%, and 77%, respectively, according to OpenAI.

GPT-3.5 came in at 80%, 58%, and 46% for those same exams, OpenAI said.

ChatGPT fares reasonably well on some sections of a Wharton MBA exam but struggles with others.

Wharton professor Christian Terwiesch recently tested the technology with questions from his final exam in operations management, which was once a required class for all MBA students, and published his findings.

Terwiesch concluded that the bot did an "amazing job" answering basic operations questions based on case studies, which are focused examinations of a person, group, or company, and a common way business schools teach students.

In other instances, though, ChatGPT made simple calculation mistakes on problems that Terwiesch thought required only sixth-grade math. Terwiesch also noted that the bot had trouble with more complex questions that required an understanding of how multiple inputs and outputs worked together.

Ultimately, Terwiesch said the bot would receive a B or B- on the exam.

ChatGPT performed at or near the passing threshold on all three parts of the United States Medical Licensing Exam.

Researchers put ChatGPT through the United States Medical Licensing Exam, a three-part exam that aspiring doctors take between medical school and residency, and reported their findings in a paper published in December 2022.

The paper's abstract noted that ChatGPT "performed at or near the passing threshold for all three exams without any specialized training or reinforcement. Additionally, ChatGPT demonstrated a high level of concordance and insight in its explanations."

Ultimately, the results show that large language models such as ChatGPT may have "the potential" to assist with medical education and even clinical decision-making, the abstract noted.

The research was still under peer review at the time, Insider noted, citing a report from Axios.

While ChatGPT has generated convincing essays on occasion, it's also raised eyebrows for spewing out well-written misinformation.

It didn't take long after ChatGPT was released for students to start using it for essays and educators to start worrying about plagiarism.

In December, Bloomberg podcaster Matthew S. Schwartz tweeted that the "take home essay is dead." He noted that he had fed a law school essay prompt into ChatGPT and it had "responded *instantly* with a solid response."


In another instance, a philosophy professor at Furman University caught a student turning in an AI-generated essay upon noticing it had "well-written misinformation," Insider reported.

"Word by word it was a well-written essay," the professor told Insider. On closer inspection, however, he noticed that the essay made a claim about the philosopher David Hume that "made no sense" and was "just flatly wrong," Insider reported.

In an interview in January, Sam Altman, CEO of ChatGPT maker OpenAI, said that while the company would devise ways to help schools detect plagiarism, he couldn't guarantee full detection.

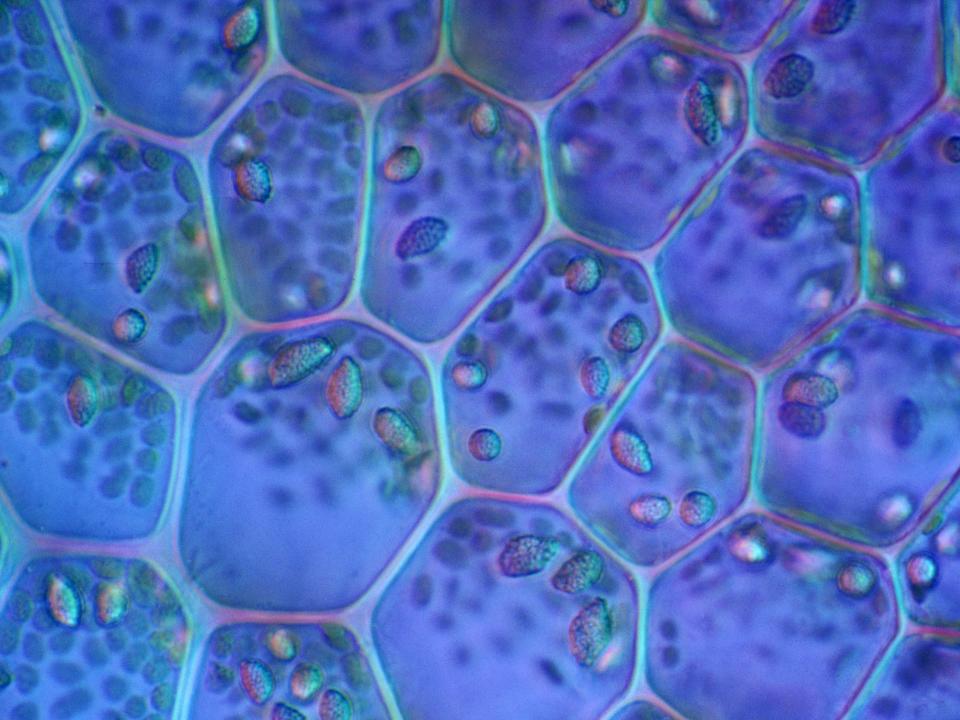

ChatGPT aced a college-level microbiology quiz.

Alex Berezow, a science journalist and executive editor of Big Think, tested ChatGPT with a 10-question microbiology quiz that he devised.

Berezow, who also holds a Ph.D. in microbiology, noted that the questions would be appropriate for a college-level final exam. ChatGPT "blew it away," Berezow wrote.

In one example, Berezow asked:

"An emergency room patient presents with a terrible headache and stiff neck. The doctor orders a spinal tap to collect cerebrospinal fluid. A Gram stain of the CSF reveals the presence of Gram negative diplococci. What is the diagnosis?"

To which ChatGPT correctly responded:

Based on the information you provided, the Gram stain of the cerebrospinal fluid (CSF) shows the presence of Gram-negative diplococci, which are bacteria that are typically oval-shaped and occur in pairs. This finding is consistent with the diagnosis of meningitis.

In another instance he asked:

"In five words or less, what is the point of Koch's postulates?"

To which ChatGPT said:

Establish causality between microbe and disease.

Setting aside the word "and," which pushed the answer to six words, Berezow said ChatGPT "nailed it."

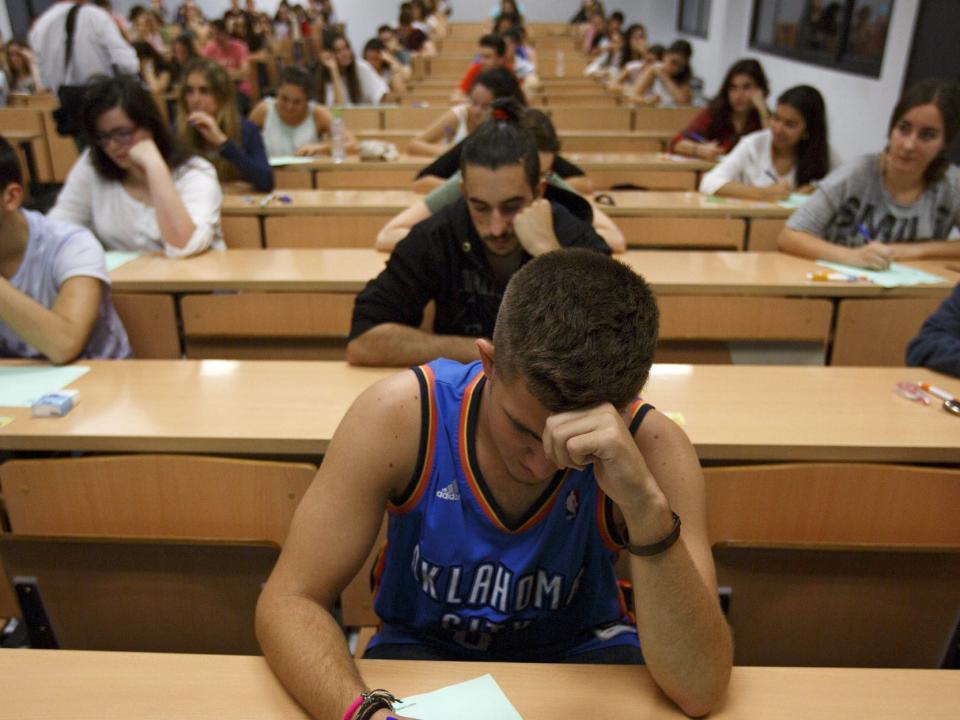

ChatGPT barely passed law school exams, earning something close to a C+.

ChatGPT passed exams in four law school courses at the University of Minnesota, according to a recently published paper by four law professors at the school.

In total, the bot answered more than 95 multiple-choice questions and 12 essay questions, which the professors graded blindly. Ultimately, the professors gave ChatGPT a "low but passing grade in all four courses," approximately equivalent to a C+.

Still, the authors pointed out several implications for lawyers and legal education. In one section they wrote:

"Although ChatGPT would have been a mediocre law student, its performance was sufficient to successfully earn a JD degree from a highly selective law school, assuming its work remained constant throughout law school (and ignoring other graduation requirements that involve different skills). In an era where remote exam administration has become the norm, this could hypothetically result in a struggling law student using ChatGPT to earn a JD that does not reflect her abilities or readiness to practice law."

The bot also passed a Stanford Medical School clinical reasoning final.

According to a YouTube video uploaded by Eric Strong, a clinical associate professor at Stanford, ChatGPT passed a Stanford Medical School final in clinical reasoning with an overall score of 72%.

In the video, Strong broke clinical reasoning into five parts: analyzing a patient's symptoms and physical findings, hypothesizing possible diagnoses, selecting appropriate tests, interpreting test results, and recommending treatment options.

He said, "It's a complex, multi-faceted science of its own, one that is very patient-focused, and something that every practicing doctor does on a routine basis."

Strong noted in the video that the clinical reasoning exam is normally given to first-year medical students, who need a score of 70% to pass.

Read the original article on Business Insider