Mathematicians Are Suddenly Rethinking the Equal Sign

All equals are not created equal—mathematicians sometimes play fast and loose.

In programming, equal signs mean different things, and variables have different types.

Turning intuitive math expertise into step-by-step coded proofs involves filling in some “holes.”

In a new preprint paper—which, for context, is not peer reviewed, and is more of an editorial or set of observations than a theory or study—mathematician Kevin Buzzard is grappling with a simple idea from coding that becomes a “thornier concept” when translated into math: what does the equal sign actually mean? And what does it not mean?

Buzzard has been in the news for his efforts to turn classic math proofs into code that can be verified by a computer, including Fermat’s Last Theorem. For him, as a classically trained mathematician, the world of computer code includes some surprises.

“Six years ago, I thought I understood mathematical equality,” Buzzard wrote in his paper. “I thought that it was one well-defined term, and that there was nothing which could be said about it which was of any interest to me as a working mathematician with a knowledge of, but no real interest in, the foundations of my subject. Then I started to try and do masters level mathematics in a computer theorem prover, and I discovered that equality was a rather thornier concept than I had appreciated.”

Buzzard learned something that every Coding 101 student learns pretty quickly, whether in class or by doing it wrong in their work. In coding, there are different kinds of equal, and you have to completely work through some of the steps the human mind easily skips over when doing math in order to code properly. “The three-character string ‘2 + 2,’ typed into a computer algebra system, is not equal to the one-character string ‘4’ output by the system, for example; some sort of ‘processing’ has taken place,” Buzzard wrote.

Before any “keyboard warriors” start trying to alert the world that this is overcomplicating things or somehow undermining tradition, it’s important to remember that just because something is an edge case, that doesn’t mean it isn’t important and worth discussing. And there’s actually a lot of nuance to be addressed in this particular discussion: should the same equal sign account for terms you’ve rounded up or down? Does the equal sign cover all of the necessary bases if we imply the passage of time between one side and the other (like, for example, how two chickens eventually become three)?

This is not a question of redefining anything in mathematics—it’s one of precision and intention.

Strongly and Loosely Typed

According to Buzzard, the meaning behind the equal sign leaves something to be desired in the “precise and intentional” department. In fact, he describes the current state of the symbol in his paper using a key term: “loose.” “In practice we use the concept of equality rather loosely, relying on some kind of profound intuition rather than the logical framework which some of us believe that we are actually working within.” In his chosen proof verifier software, known as the Lean system, steps must be defined much more discretely.

In our minds, and in the “minds” of the computers we’ve programmed, there are more steps taking place behind the scenes of an equation than what meets the eye—it’s just a matter of how we arrange them. Some programming languages, known collectively as the “strongly typed” languages, require you to specify what a variable is. An ‘x,’ for example, could be an integer, a long decimal number, or a string of mixed symbols like a password. Each of those categories is referred to as a “type,” and that type is stored along with the value of the variable. If you try to do a math operation, or equate two variables of different types, the coding language won’t allow it.

In some other languages (known as “loosely typed” languages), and in most actual mathematical practices, “type” is more contextual. Instead of checking the assigned type, the language checks whether what’s inside the variable can complete the math question being asked. When look at Buzzard’s example of 2+2=4, in our heads, we turn the string that is 2+2 into the integer that is 4. Then, we can compare the integers that now exist on both sides of the equal sign.

If that sounds like overthinking, you’re right—using the highly intuitive and context-adapting human mind, we can do it pretty much without thinking at all. But math is an abstract field of study, and computer programming is arguably even more so. The machines we rely on to solve complicated problems need a lot more direction than our flexible human minds, and Buzzard’s career project is converting human-written math proofs into all of the algorithmic steps that are required to code them with a computer.

That means taking every. Single. Step.

Back to Math Equality

In his paper, Buzzard uses mathematician Alexander Grothendieck as an example. Grothendieck was a luminary in 20th century math who laid out a number of foundational ideas in algebraic geometry. He arguably made his name by doing “loosely typed” mathematics—after all, algebraic geometry combines principles from disciplines that seem quite separately defined, like abstract algebra’s sets and geometry’s plots.

Buzzard points out a specific moment where Grothendieck doesn’t just loosely type—he asks math peers to understand when he uses an entirely new term, “canonically isomorphic,” as a new variety of equality. “Lean would tell Grothendieck that this equality simply isn’t true and would stubbornly point out any place where it was used. Let me emphasize once more: Grothendieck was well aware of what he was saying, but Lean would argue that he was confusing = and ≅,” Buzzard explained.

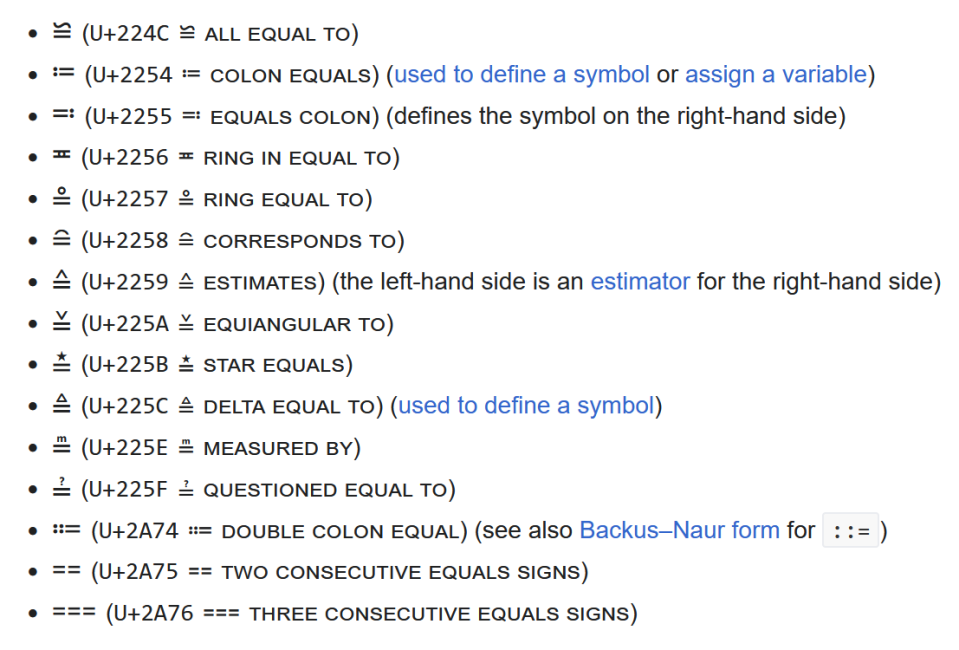

The latter of those two symbols is the congruence sign: a symbol which means that two sets of points form the exact same shape with the exact same size. If you delve into the full set of Unicode symbols (which includes virtually everything in the extended set of characters that a computer can display as type), there are dozens of versions of ideas like equality and congruence. Even ≌ and ≅ are different—look at how the “toothpaste” on top is oriented.

Buzzard concludes that if math wants to be ‘formalized’—in other words, to be seamlessly turned into the tiny individual steps represented by code and formal proofs—there are many skips or “holes” (like Grothendieck’s) that will need to be filled. He’s not claiming that any of this work isn’t correct—just that it isn’t yet fully broken down into all of the required steps.

Turning these holes into code will help the people who are building libraries in software like Lean. In the long run, Lean can improve mathematics if used well, because proofs of more and more complex math only grow... well... longer and longer. And it’s becoming far more common for mathematicians to regularly compare across subfields the way Grothendieck did, making it even more important to codify these comparisons for everyone’s understanding.

And Buzzard wants to start laying the groundwork for exactly that. “One useful trick is to abuse the equality symbol, making it mean something which it does not mean; this can trick the reader into thinking that nothing needs to be checked,” Buzzard explained.

As mathematicians continue to tip the scales with 100+ page proofs that even their colleagues aren’t able to parse, it’s probably a good idea to have as much certainty as possible about what equals what.

You Might Also Like