AI Creation ‘Our T2 Remake’ Is Groundbreaking, Even Though It’s Not a Good Movie

Outside, the rain was relentless; inside the lobby of Los Angeles’ NuArt Theater, so was the cheer. At a sold-out March 6 premiere for the crowdsource-funded “Our T2 Remake,” a full-length parody of “Terminator 2: Judgment Day” produced entirely with AI tools, the energy recalled Sundance circa 1995.

Among the supporters were Caleb Ward, co-founder of AI filmmaking education platform Curious Refuge and creator of last year’s viral AI short “Star Wars by Wes Anderson”; Dave Clark, who created another viral AI video with his Adidas spec and whose short film “Another” would precede the feature; Nem Perez, founder of AI storyboarding mobile app Storyblocker Studios and the director of “T2 Remake”; and Jeremy Boxer, the former Vimeo creative director who now runs consultancy Boxer and is the co-founder of community group Friends With AI.

With the audience seated, ready to applaud the AI creations, hosts Perez and executive producer Sway Molina took the stage for the first order of business: thanking the creators — and removing any hopes that the film might represent a major leap in cinema.

“Let’s set some expectations here, right?” Perez said with an embarrassed laugh. “Hopefully, some of you aren’t here to watch a remake of ‘Terminator.’ It’s far from it, guys, because we didn’t really quite do that. This is an experimental film —”

“Highly experimental,” Molina said.

“Highly experimental film, using experimental technology —”

“Highly experimental.”

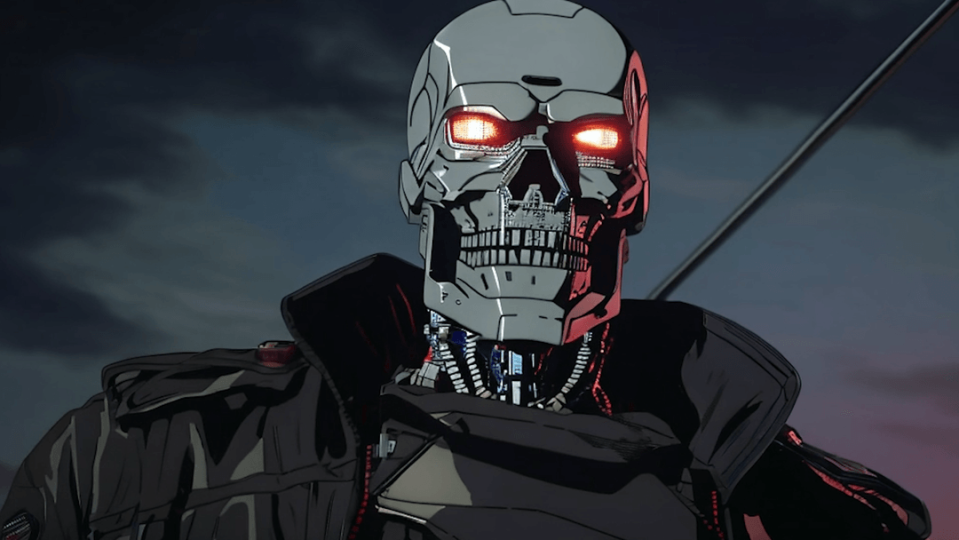

On screen, the soft-sell wasn’t false modesty. With global AI creators assigned to create 50 sections of the film across two weeks, few creative parameters (satirize ChatGPT world domination by Skynet in 2050, use no imagery or dialogue from the original), and varied levels of filmmaking talent, losing the familiar plot quickly became a foregone conclusion. Some images were impressive; others were diminished by the rapid-fire advances of AI. Even though the scenes were only six months old, more than a few had the dated vibe of “Flash Gordon” in a post-“Star Wars” world.

After the screening, Perez told IndieWire that the film achieved its goals as a showcase and served as a blow against what he called the “doomsday scenario” that can dominate AI discussions.

“Whenever anyone would bring up the topic of AI, they would bring up Skynet, which is the AI in ‘Terminator,’ and how it was going to be the end of the world, we’re all going to lose our jobs,” he said. “Meanwhile, the strikes were happening and it was a very polarizing time. A lot of people just weren’t seeing the positives of AI and how it was bringing this community together, and how people were creating really wonderful things.”

Each artist used a variety of mixed media styles (from glitchy t-pose models to Funko Pop figures), which transitioned every two to three minutes. (To maintain a cohesive storyline, the producers added brief interstitials from the original “T2” in post to help orient viewers.)

An open call recruited artists, primarily from X (fka Twitter). Among them were AI rock stars such as The Butcher’s Brain, Uncanny Harry, Jeff Synthesized, and Brett Stuart (who impressed with his polished-looking Teddy Bear Terminator made with the customized Laura AI model). Most artists used Midjourney and Runway platforms, and generators from Pika Labs (video), Kaiber (animation), Leonardo (image), and Suno (music).

Perez devised a collaborative workflow that had artists select scenes through a web store set up like a limited-release NFT drop, with assigned channels to post their progress. The artists were also invited to a private Discord for post-production collaboration. Sound, for the most part, was pulled from a licensed stock website.

The scenes ran the gamut of live-action and animation parodies (including a “Star Wars” riff on “Law and Order”) dominated by different stylistic interpretations of Arnold Schwarzenegger’s iconic T-800 Terminator unit. Another reason for the film’s inconsistent look was that the producers ran two waves of artists with a lull in between, which let them take advantage of rapidly improving tool sets.

Perez and Molina contributed their own scenes as well. “I was thinking about ‘Robot Chicken’ and doing a sitcom parody with a Funko Pop toy,” Molina said. “And the first thing that came into my mind was Mr. T as the Terminator. It starts with a Mr. T-800 jingle, where his head is popping from everywhere. That was actually a childhood inspiration by the intro to the classic ‘Donald Duck’ shows. I was writing and editing and generating images all at once. That’s kind of like the beauty of AI.”

Perez contributed the opening drone and spaceship attack, which was the last scene completed, and the climactic faceoff between T-800 and T-1000. “For the opening, I did a lot of visual effects compositing and 3D work,” he said. “I added a couple of 3D spaceships or hunter drones that were flying around in that scene because movement is tough for AI, and the only way to do that was with 3D. Then I used compositing for the background so that the fire can move a little bit more lifelike, and so the smoke and the fog effects added a little bit more life to each shot.”

He also incorporated his own performance. “In the Terminator fight scene, I used something called Gen-1, which is where you add an effect on top of things that you shoot,” Perez said. “I dressed up like the T-800 and the T-1000, bought a leather jacket and a police uniform on eBay, and acted out both scenes on a green screen. I’m a big video game nerd and I grew up in the ’90s playing ‘Mortal Kombat’, so it was a play on the video game where the two faced off.”

Perez said AI is still in its early Wild West stage and will become increasingly sophisticated as artists experiment with rapidly improving tools. Meanwhile, his Storyblocker app uses AI to help visualize scripts.

“Personally, I don’t think that films like ‘T2’ are going to replace traditional filmmaking,” he said. “The biggest [weakness] is character consistency, making sure that your characters look exactly the same in every shot with their wardrobe and hair. These are tough because the AI interprets it differently every time you prompt it.” He also described the film’s lip-syncing as “atrocious,” due to the tools then available; these have rapidly improved.

“The other thing is camera control, which didn’t exist when we started,” said Perez. “These are mostly still images with slight movement, either push-ins or pans, but you’re not going to do a crane-down shot into a dolly push move or anything too complex. But this is improving. Runway has introduced more camera controls, but you’re still limited to a four-second scene. Anything after that and the character falls apart.”

Even on their truncated timeline, Molina said they were all too aware that tools were becoming obsolete under their fingertips. “We were tempted to make changes, to go back and fix things, but we decided not to,” he said. “We’re making history here. This is a time capsule, so let’s leave it as is. As we’ve seen already in the last six months while making this movie, the quality and control has just gotten phenomenal.”
