The Heated Debate Over Who Should Control Access to AI

Credit: Illustration by James Steinberg for TIME

In May, the CEOs of three of the most prominent AI labs—OpenAI, Google DeepMind, and Anthropic—signed a statement that warned AI could be as risky to humanity as pandemics and nuclear war. To prevent disaster, many AI companies and researchers are arguing for restrictions on who can access the most powerful AI models and who can develop them in the first place. They worry that bad actors could use AI models to create large amounts of disinformation that could alter the outcomes of elections, and that in the future, more powerful AI models could help launch cyberattacks or create bioweapons.

But not all AI companies agree. On Thursday, Meta released Code Llama, a family of AI models built on top of Llama 2, Meta’s flagship large language model, with extra training to make them particularly useful for coding tasks.

The largest, most capable models in the Code Llama family outperform other openly available models on coding benchmarks and nearly match GPT-4, OpenAI’s most capable large language model. Like the other AI models Meta develops, the Code Llama language models are available for download and are free for commercial and research use. In contrast, most other prominent AI developers, such as OpenAI and Anthropic, allow businesses and developers only limited, paid access to their models, which the AI labs say helps to prevent them from being misused. (It also helps to generate revenue.)

For months, Meta has been charting a different path from the other large AI companies. When Meta released its large language model LLaMA on Feb. 24, it initially granted access to researchers on a case-by-case basis. But just one week later, LLaMA’s weights, the complete mathematical description of the model, were leaked online.

In June, U.S. Senators Richard Blumenthal and Josh Hawley, Chair and Ranking Member of the Senate Judiciary Subcommittee on Privacy, Technology, and the Law, wrote a letter to Meta CEO Mark Zuckerberg. They noted that “Meta appears not to have even considered the ethical implication of its public release,” sought information about the leak, and asked whether Meta would change how it releases AI models in the future.

A month later, Meta answered in emphatic fashion by releasing Llama 2. This time, Meta didn’t make any attempt at all to limit access—anyone can download the model.

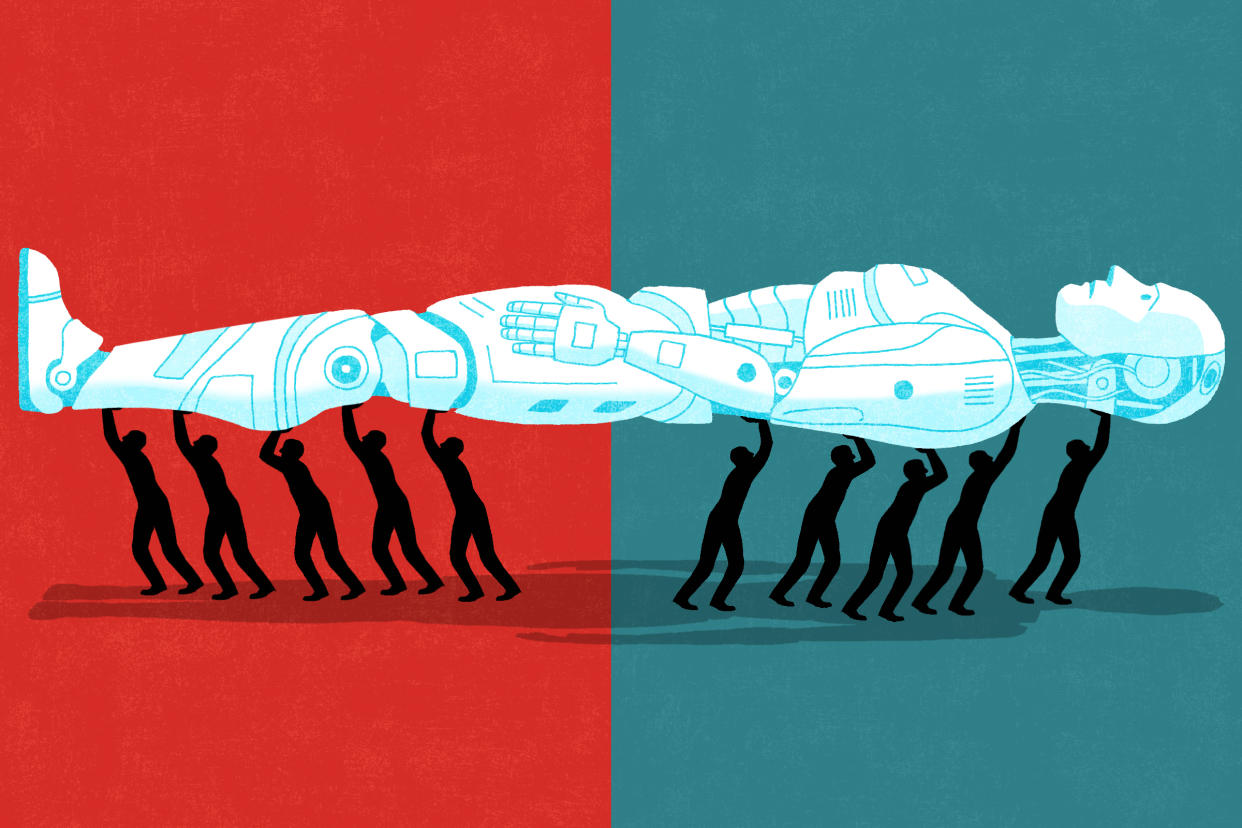

The disagreement between Meta and the Senators is just the beginning of a debate over who gets to control access to AI, and its outcome will have far-reaching implications. On one side, many prominent AI companies and members of the national security community, concerned by the risks posed by powerful AI systems and possibly motivated by commercial incentives, are pushing for limits on who can build and access the most powerful AI systems. On the other is an unlikely coalition of Meta and many progressives, libertarians, and old-school liberals, who are fighting for what they say is an open, transparent approach to AI development.

Frontier model regulators

In a paper titled Frontier Model Regulation, published in July on OpenAI’s website, a group of academics and industry researchers, including some from OpenAI, Google, and DeepMind, lays out a number of ways in which policymakers could impose such limits. The first two types of intervention it describes (developing safety standards and ensuring regulators have sufficient visibility) are relatively uncontroversial.

But the paper also suggests ways that regulators could ensure AI companies are complying with the safety standards and visibility requirements. One such way is to require AI developers to obtain a license before training and deploying the most powerful models, also referred to as frontier models. When Sam Altman, CEO of OpenAI, proposed a licensing regime in a Senate Committee hearing in May, many commentators disagreed. Clément Delangue, CEO of Hugging Face, a company that develops tools for building applications using machine learning and is supportive of the open-source approach, argued that licensing could increase industry concentration and harm innovation.

The paper can be read as a statement of support, by those companies, for the broad approach it outlines. In a comment on an internet forum, Markus Anderljung, head of policy at the Centre for the Governance of AI and one of the lead authors of the paper, explained that although he thinks a licensing regime is probably required, the paper’s recommendations were made less definitive so that a range of authors could be represented.

However, even among the AI companies that are concerned about extreme risks from powerful AI systems, support for licensing is not unanimous. A source at Anthropic familiar with the labs’ deliberations about the paper tells TIME that there was consensus within Anthropic that it was too early to discuss licensing, and that Anthropic chose not to be among the co-authors because the paper focused on licensing over other potential measures, such as tort liability. Jack Clark, one of the co-founders of Anthropic, has previously said that licensing would be “bad policy.”

Open-source promoters

In a blog post that accompanied the release of Llama 2, Meta explained that it favors an open approach because it is more likely to promote innovation and would actually be safer: open-sourcing AI models means that “developers and researchers can stress test them, identifying and solving problems fast, as a community.”

Researchers in the field of AI safety have argued that, although disclosure seems to benefit cyber-defense more than it does cyber-offense, this may not be the case for AI development. Meanwhile, recent research also argues that Meta’s approach to AI model deployment is not fully open, because its license prohibits larger-scale commercial usage, and that Meta profits from this approach because it can take advantage of improvements the open-source community makes to its models.

Meta’s leadership is also not convinced that powerful AI systems could pose existential risks. Mark Zuckerberg, co-founder and CEO of Meta, has said that he doesn’t understand the AI doomsday scenarios, and that those who drum up these scenarios are “pretty irresponsible.” Yann LeCun, Turing Award winner and chief AI scientist at Meta, has said that fears over extreme AI risks are “preposterously stupid.”

Joelle Pineau, vice president of AI research at Meta, told TIME that a commitment to open source meant Meta held itself to higher standards and was able to attract more talented researchers. Pineau acknowledged that Meta’s models could be weaponized but drew an analogy to cybersecurity, in which security is maintained because good actors outnumber bad actors.

“When you put the model out there, yes, you create an opportunity for someone in the basement to try to create something with it,” she says. “You also create an opportunity for thousands of people to help make the model better.”

Meta is one of the few prominent AI developers to fully back the open-source approach, but many less well-resourced groups also want to preserve open access to AI systems. Yacine Jernite, machine learning and society lead at Hugging Face, is increasingly concerned about the lack of transparency and accountability that AI developers currently face, a concern shared by many progressives.

“The main operational question is who should have agency and who should be making decisions about how technology is shaped,” Jernite says. “And there's two approaches to that: One is saying we're going to build this company, where we have people who we think are good people because we hired them. And because they're good people, they're going to make good technology, and they should be trusted to make that technology. Another approach is to look at democratic processes… Part of democracy is having processes to have more visibility, more understanding, and more power rebalancing.”

Jeremy Howard, cofounder of fast.ai, a non-profit AI research group, has argued for classical liberal values on questions of AI governance, contending that restricting access to AI systems would centralize power to such an extent that it would risk “rolling back the gains made from the Age of Enlightenment.”

Adam Thierer, a senior fellow at the center-right, pro-free market think tank R Street Institute, has written about how licensing and other efforts to regulate AI could stifle innovation. Thierer also points to the “Orwellian” levels of surveillance that would be required to prevent unlicensed developers from training frontier systems, and is skeptical that other countries, China in particular, would follow the same rules.

The battle ahead

As it currently stands, the E.U. AI Act, which is working its way through the legislative process, would require developers of “foundation models”—AI models which are capable of performing a wide range of tasks, such as Llama 2 and GPT-4—to ensure their systems conform to a range of safety standards.

The Act wouldn’t impose a licensing regime, but it could impede open-sourcing in other ways, says Nicolas Moës, director of European AI governance at the Future Society. Unlike the frontier model definition favored by many in the U.S., the foundation model definition would apply to all general-purpose AI models, not just the most powerful ones.

Large companies like Meta should be able to comply with the safety standards relatively easily, and the effects on smaller open-source developers will be offset by reduced liabilities for smaller organizations, says Moës. Still, in July, a group of organizations including Hugging Face and EleutherAI, a non-profit research lab, released a paper making recommendations for how the E.U. AI Act can support open-source AI development, including setting proportional requirements for foundation models.

The frontier model regulation paper suggests that many industry leaders will call for a licensing regime in the U.S., an approach different from the one taken in the E.U. Meta and others in the coalition that favors a more open, transparent approach to AI development may push for carve-outs for open-source developers.

Senate Majority Leader Chuck Schumer unveiled his proposed approach to AI regulation in June, calling on Congress to act swiftly. But Congress is only one of the battlegrounds in the coming debate. “I think all of the action is in the agencies, in the courts, at the state level in the United States,” says Thierer. “And then in the world of NGOs and business developments and the governance thereof.”