How helpful are mental health apps?

The global mental health apps market is projected to hit $17.5 billion by 2030 as demand continues to soar, but critics are starting to question the cost to users.

The market, which includes virtual therapy, mental health coaches, digitised CBT and chatbot mood trackers, experienced an "upsurge" during the Covid-19 pandemic, according to Grand View Research. It was worth about $6.2 billion last year and is expected to grow by 15.2% a year over the next six years.

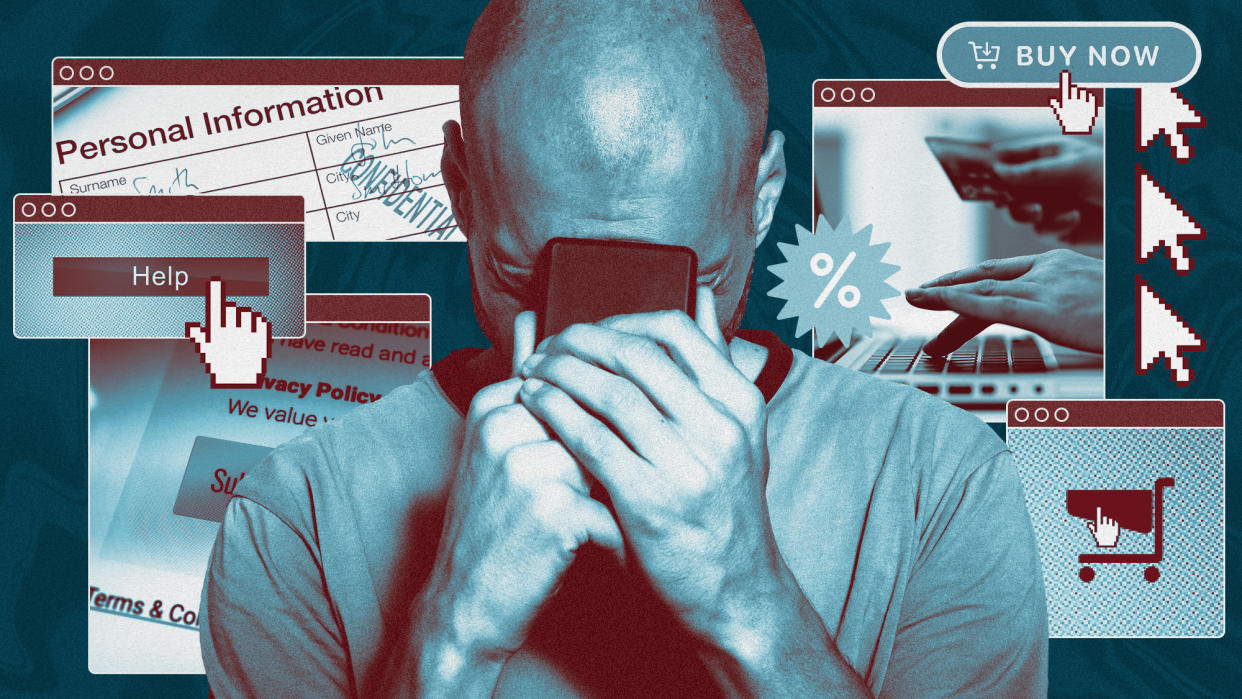

But scrutiny is also growing, amid concerns about a dearth of regulation or universal standards, which could expose users to unlicensed practitioners. Another worry is what happens to the "considerable amounts of deeply sensitive data they gather", said The Observer.

'Privacy not included'

Mental health apps "redefine accessibility", said TechRound. They're "advantageous for those with busy routines, living in remote settings, or encountering obstacles in attending face-to-face appointments, extending the reach of mental health care". Many are either free or low-cost, which also makes care more affordable.

This week, a study concluded that a mental health assessment interview powered by artificial intelligence was as good at assessing symptoms of depression as the "gold standard" questionnaires in use across much of US healthcare. The technology could "help mitigate the national shortage of mental health professionals" and excessive wait times, said researchers at The University of Texas at Austin. It could be a "game-changer".

Yet most psychiatry experts agree that so far "there has been little in the way of hard evidence" to show that any of these new self-help tools actually help, said The Observer.

But there is growing evidence that they could harm users' privacy. A December 2022 study of 578 mental health apps, published in the Journal of the American Medical Association, found that 44% shared users' data with third parties.

Last year, the US Federal Trade Commission fined BetterHelp, arguably the most prominent mental health platform, $7.8 million (£6.1 million). It found that the California-based company had shared sensitive data with third parties for advertising purposes, after promising to keep the information private. (BetterHelp did not respond to The Observer's request for comment.)

This is far from "an isolated exception", said the paper. Last year, independent non-profit watchdog Mozilla slapped 29 out of 32 mental health apps surveyed with "privacy not included" warning labels, for failing to protect data.

'Worse than any other product'

Mozilla began assessing these apps in 2022, after they surged in popularity during the pandemic. Private mental health struggles "were being monetised", Jen Caltrider, director of Mozilla's "Privacy Not Included" research, told The Observer.

It seemed as though many apps "cared less about helping people and more about how they could capitalise on a gold rush of mental health issues", said Caltrider. What the organisation found "was way worse than we expected".

Mozilla researchers described mental health apps as "worse than any other product category" when it came to privacy. The "worst offenders are still letting consumers down in scary ways, tracking and sharing their most intimate information and leaving them incredibly vulnerable", Caltrider said.

Some of this is "completely legal" in the US, said Yahoo Finance. The US lacks a national privacy law, while its "primary healthcare privacy law", the Health Insurance Portability and Accountability Act (HIPAA) of 1996, does not apply to many mental health apps. It only applies to information shared between a doctor and their patient.

But "the most worrying question", said The Observer, "is whether some apps could actually perpetuate harm and exacerbate the symptoms of the patients they’re meant to be helping".

The AI chatbot companion app Replika was "one of the worst apps Mozilla has ever reviewed", its researchers said. One user of the popular app posted a screenshot of a conversation, in which the bot "appeared to actively encourage his suicide attempt", said The Observer.